Human Error

Alexandre D'Amours - 2020-05-12

1. Introduction

Professional interest in human error seems to go back at least 100 years. The first industrial engineers, having optimized machines to the highest degree, turned their attention to the human element. A simple "pass or fail" boolean model of human action was used to try to better understand why humans make mistakes.

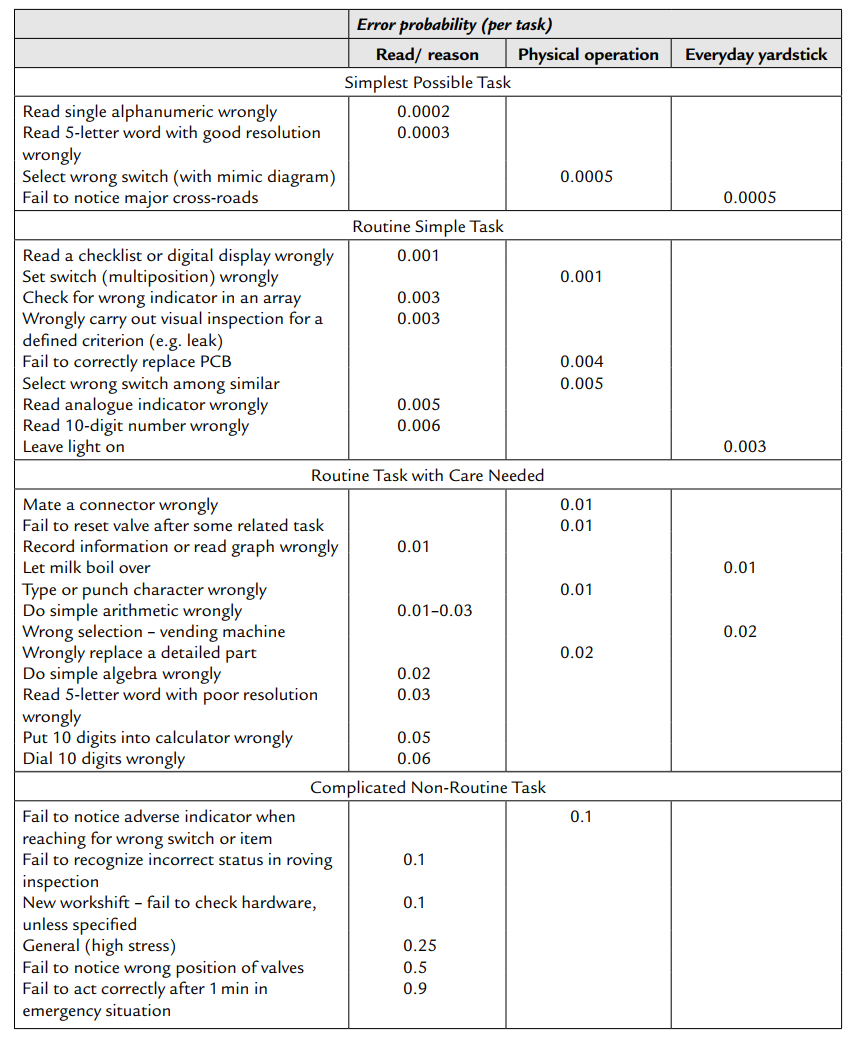

Between the 1960s and 1980s, interest in human error grew as the nuclear, aviation and health care industries became increasingly complex and interdependent[1]. Rates of error were measured for the average human doing multiple tasks in different environments, as shown in Table 1[2].

Table 1: Error probability for different tasks[2].

Since the early 2000s, renewed interest in human error in increasingly complex systems has led to the development of a new field: resilience engineering. Professionals working in complex fields like engineering or health care can fail in a myriad of ways, but more often than not, they are able to self-correct and develop ways to self-monitor. The term "Safety-II" has been coined to describe research into how things go right, as well as how things go wrong, in complex systems[1]. This more holistic approach is still relatively young, and interested readers are encouraged to read the works of Erik Hollnagel.

2. Structural Engineering

Structural engineering is not the only field dealing with public safety that uses mathematical equations to model the world. However, it is one of the only such fields in which prototyping and instrumentation are not extensively used to validate calculations. Most structural engineering projects are custom-designed and built without any field testing or validation of the calculation hypotheses.

At first glance, this seems problematic. All humans make mistakes, and structural engineers rely almost exclusively on mathematical calculations to size their buildings. Is there not a single point of failure? If so, why isn't structural collapse more common?

Perhaps structural engineers are simply better at avoiding errors. Maybe they review all of their calculations and catch every mistake, every time. Let's use some simple statistics and a "Safety-I" approach to gain insight into the kind of risk structural engineers are dealing with.


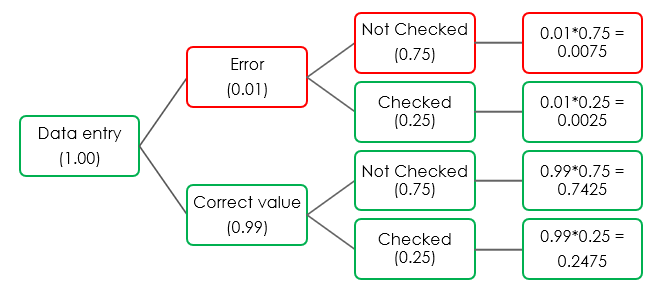

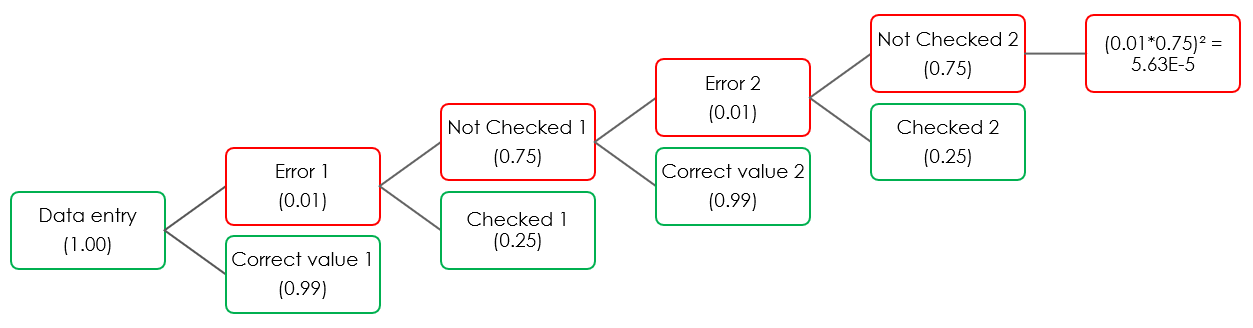

To see how the binomial distribution works, let's run an example calculation. Suppose a subject enters data with 99% reliability (a 1% error rate). After waiting a couple of days, he rechecks 25% of his data entries, leaving the other 75% unchecked. Any error in a rechecked entry is detected and corrected 100% of the time. The probability of an error making it through his checking process is therefore 1% × 75% = 0.75%, as shown in the top branch of the probability tree illustrated in Figure 1. If the subject were to enter 1000 values, we would expect on average 7.5 unchecked errors.

Figure 1: Probability tree example for a single user
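The top branch of Figure 1 can be sketched in a few lines of Python. This is a minimal illustration under the text's assumptions: errors occur independently with probability P(E), and every rechecked error is caught, so an error survives only if its entry is also left unchecked, with per-entry probability P(E) × (1 − checking rate). The function names are hypothetical, and the 25% checking rate in the example is the value that reproduces the 0.75% quoted above.

```python
def unchecked_error_probability(error_rate: float, check_rate: float) -> float:
    """Top branch of the tree: the entry is wrong AND it is not among the rechecked ones."""
    # Assumes a rechecked error is always caught and fixed, as stated in the text.
    return error_rate * (1.0 - check_rate)


def expected_unchecked_errors(error_rate: float, check_rate: float, n_entries: int) -> float:
    """Mean of the binomial distribution, N * p."""
    return n_entries * unchecked_error_probability(error_rate, check_rate)


p = unchecked_error_probability(0.01, 0.25)
print(f"{p:.2%}")  # 0.75%
print(f"{expected_unchecked_errors(0.01, 0.25, 1000):.1f}")  # 7.5
```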

How can we estimate average data entry error rates for structural engineers? A simple approximation can be taken from Table 1. According to this data, routine tasks, like typing characters into a computer, have a 1% error rate. Extremely simple tasks, like turning on a switch when asked to, come in at 0.1%. Let's assume structural engineers are 10 times as reliable as that: a 0.01% error rate, or 1 mistake for every 10 000 entries.

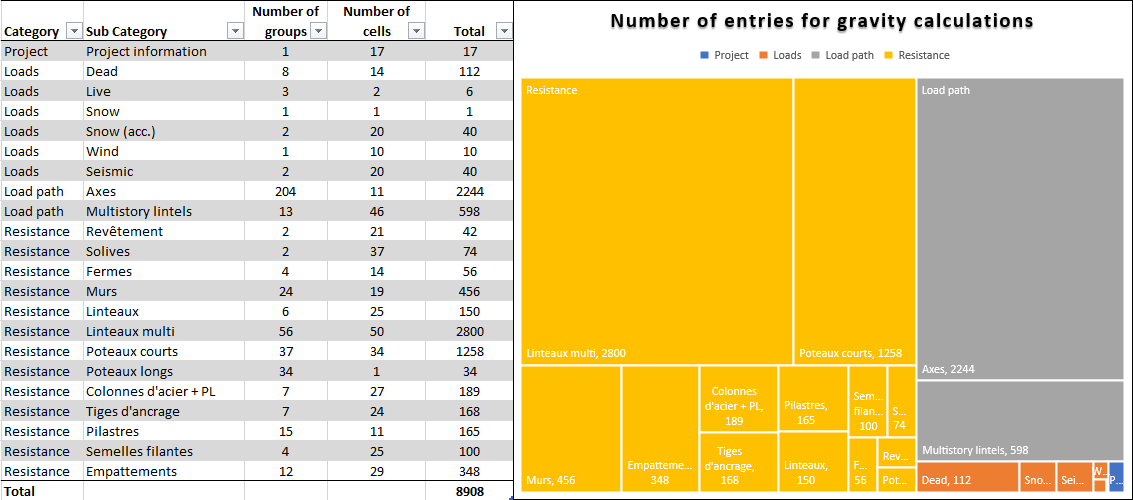

How much data entry are structural engineers actually doing for a given project? For a standard 4-story light wood frame project, we used a simple macro to count the number of Excel cells updated in the gravity spreadsheet. The total number of entries, visible in Figure 2, is approximately 9000, which we round up to 10 000 entries per project.

Figure 2: Gravity workbook entries

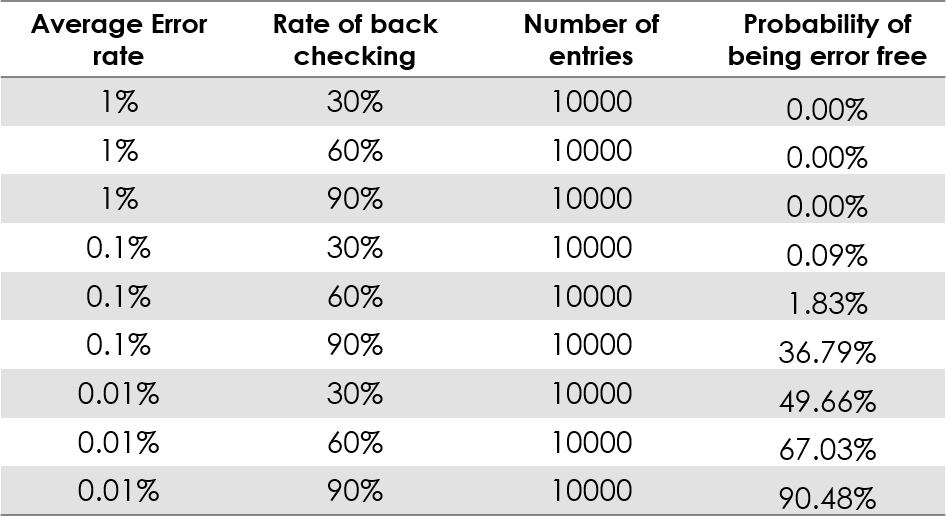

At what rate are structural engineers back-checking their data entry? Most engineers check at variable rates, depending on multiple factors (number of mistakes already found, time of day, pressure to finish the project, etc.). Let's take three different rates to get a full picture: 30%, 60% and 90%.

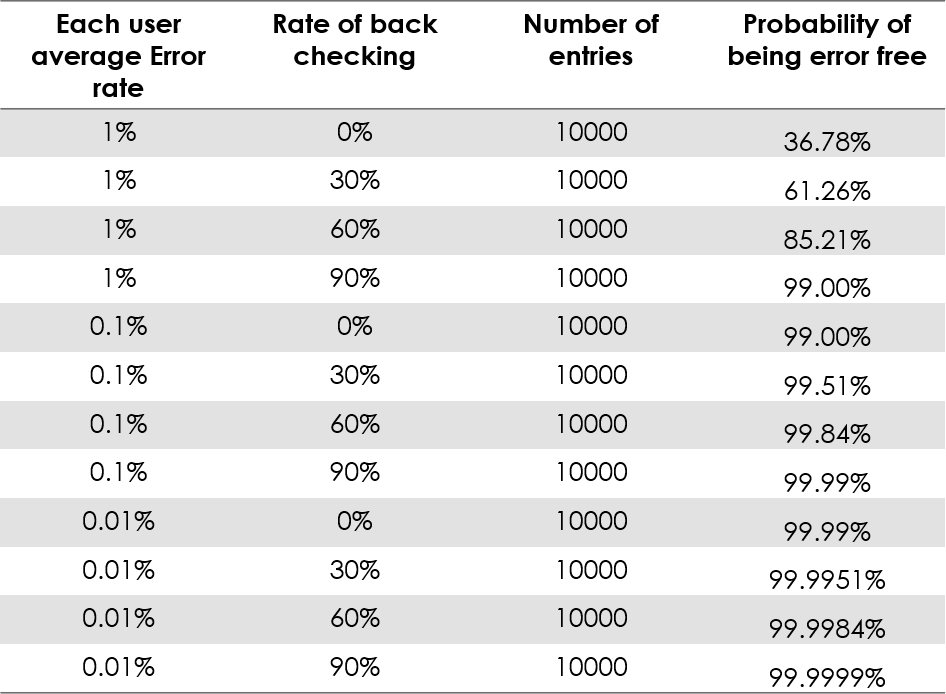

Table 2: Single user probability of being error free (binomial distribution)

Table 2 paints a bleak picture. With an error rate of 0.01% and a back-checking rate of 90%, there remains a roughly 10% chance of having made at least one mistake. This might strike a familiar chord for young engineers or interns who are just starting out. When you have no intuition for the magnitude of the numbers you are looking for, it's quite rare to reach the end of a complex calculation with the right answer. It is often surprising how many mistakes can be made in a seemingly simple calculation.
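A Table 2 style grid can be computed directly from the binomial probability of zero unchecked errors, (1 − p)^N with p = P(E) × (1 − checking rate). This is a sketch: the list of error rates is our assumption (Table 2 itself is not reproduced here), but the function recovers the two cases quoted in this article, about 90.5% error free (roughly a 10% chance of a mistake) at 0.01% and 90% back-checking, and about 0.09% error free at 0.1% and 30%.

```python
# Hypothetical grid: 30/60/90% back-checking as in the text, N = 10 000 entries.
ERROR_RATES = [0.01, 0.001, 0.0001]
CHECK_RATES = [0.30, 0.60, 0.90]
N_ENTRIES = 10_000


def probability_error_free(error_rate: float, check_rate: float, n_entries: int) -> float:
    """Binomial P(k = 0): none of the N entries carries an unchecked error."""
    p = error_rate * (1.0 - check_rate)  # per-entry chance of an unchecked error
    return (1.0 - p) ** n_entries


for pe in ERROR_RATES:
    row = [probability_error_free(pe, c, N_ENTRIES) for c in CHECK_RATES]
    print(f"P(E) = {pe:.2%}: " + "  ".join(f"{v:8.2%}" for v in row))
```

Reading across a row shows how strongly the result depends on the back-checking rate once N is in the thousands.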

This model is, of course, overly simplistic and does not take into account many factors. Engineers do not blindly enter data into spreadsheets. There is a continuous prediction and validation going on inside the engineer's mind. Also, while reviewing, many experienced engineers report having an "instinct" or "gut feeling" that makes anything unusual "jump out" without having to review all of the data. However, at the "Safety-I" level of analysis, we aren't analyzing what engineers do right (not yet!), only the rates at which they fail and how we can reduce the impact of these failures.

3. Fixing what isn't broken

In North America, we hardly ever hear about catastrophic structural collapse (the exceptions, such as the Hyatt Regency walkway collapse, seem to prove the rule). It seems structural engineers aren't making as many mistakes as our simplified model would suggest.

Here, "Safety-II" thinking makes more sense. One of the main problems with trying to understand complex systems with a "Safety-I" approach is that the safer you are, the less data you have to analyze (as you are focusing only on past mistakes that led to collapse). Let's start with a (homemade and incomplete) list of historical reasons why a structural engineer's mistakes would not result in structural collapse, that is, a list of why things "go right".

- Ductile materials (most materials used in structural engineering) will deform and cause other visible problems before failing.

- Safety factors (overestimating loads, underestimating material resistance).

- Built in redundancy.

- Overly simplified models: Neglected joint stiffness, neglected stiffness of structural and non-structural elements, neglected bonding and friction, etc.

- Unofficial error-detecting mechanisms:

  - In-house, mistakes can be detected by an experienced technician, colleague or reviewer.

  - Mistakes can be detected by another experienced professional (e.g., an architect).

  - Mistakes can be detected by an experienced fabricator.

  - Mistakes can be detected by an experienced contractor or subcontractor.

  - Mistakes can be detected by an experienced site inspector.

This list is by no means complete; it simply highlights some of the reasons why mistakes might not lead to structural collapse. Now let's look at some current trends that might be eroding structural engineers' success story.

3.1. Ductile Materials

Research into fibre-reinforced polymers and epoxy bonding is in full swing. These materials and bonds generally behave in a brittle manner up to failure, which could be problematic, especially if we don't understand how important ductility has been to our profession in the past. Ductile materials have built-in redundancy: they absorb not only seismic energy, but also mistakes, bad workmanship and degradation. To what degree this has aided structural engineers' track record remains to be seen.

3.2. Safety Factors

The trend has always been to push for more efficiency in design. Researchers develop more precise (and complex) ways of calculating loads and more precise values for material resistance. There is no reason to think this trend is about to reverse.

Also, the lack of collapse in our everyday work creates an asymmetry in our intuition. Intuition is most useful when you learn as much from failure as from success. Most engineers never see first-hand what a catastrophic failure looks like, nor do they find out when they have gone too far in their pursuit of efficiency, and with good reason. However, asymmetric reinforcement and practical drift[4] lead to asymmetric intuitions (e.g., overestimating factors of safety).

3.3. Built-in Redundancy

Structural engineers are pushed (by contractors, other professionals, clients or competition between firms) towards more efficient designs. Unsupervised and/or inexperienced engineers are more likely to compromise on good practices under such pressures, and the average experience of engineers is on the decline, as we will see in Section 3.5.

3.4. Overly Simplified Models

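Advances in structural engineering software allow engineers to take more and more details into account (e.g., non-linear material deformations, 2D shells with cracking analysis, 3D time-history seismic loading, etc.). Each refinement captures some of the stiffness and strength that simplified models used to neglect, and with it, some of the hidden reserve those simplifications once provided.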

3.5. Unofficial Error-Detecting Mechanisms

With the baby-boomer generation heading for retirement in North America, a lot of experience is slowly being drained from firms. Efficient error detection requires good intuition, which is built with years of experience. It is logical to infer that less experience will lead to more mistakes.

Also, centralized 3D modelling is removing the need to redraw or rebuild models from scratch at each step of the design. While often seen as a useless burden on the building construction workflow, the repetition in drafting and modelling might be an important error-detecting mechanism.

This is by no means a "Safety-II" analysis of the structural engineering profession. We simply borrowed the idea of looking at what goes right to better understand where things might go wrong. One might then wonder whether anything practical can be done to reduce risk without falling back to the pen-and-paper era. One avenue we might explore is repurposing a time-tested structural engineering trick: redundancy.

4. Artificial Redundancy

Redundancy is a basic concept in structural design, but it can be applied just about anywhere. In his book "The Black Swan", professional risk analyst Nassim Nicholas Taleb makes a case for increasing redundancy in complex systems, such as the global market[3]. As engineers, we are trained to pursue efficiency and eliminate redundancy. However, as in structural design, overly efficient systems are inherently fragile.

To follow up on our example, say we add a simple redundancy: two users run the same calculations on the same software. Both users are assumed to be statistically independent. At the end of the calculations, software compares every entry made by both users. Wherever there is a difference, they talk it through and resolve the error 100% of the time. The updated top branch of the probability tree is shown in Figure 3, and the updated probabilities of being error free are shown in Table 3.

Figure 3: Probability tree example for two users (top branch only)

This time, the probability of a mistake making it through the double-checking process is (0.75%)² ≈ 0.00563%. If the subjects were to enter 1000 values, we would expect on average 0.056 unchecked errors, a more than 100-fold decrease compared to the single user.
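The two-user branch of Figure 3 amounts to squaring the single-user probability, since the users are assumed independent. The sketch below uses hypothetical names and the same 1% error rate and 25% checking rate that give the 0.75% single-user figure; like the text, it counts any entry that both users leave wrong as undetected.

```python
def unchecked_single(error_rate: float, check_rate: float) -> float:
    """One user: the entry is wrong and was not rechecked."""
    return error_rate * (1.0 - check_rate)


def unchecked_two_users(error_rate: float, check_rate: float) -> float:
    """Two independent users must both leave the same entry wrong and unchecked."""
    return unchecked_single(error_rate, check_rate) ** 2


p1 = unchecked_single(0.01, 0.25)
p2 = unchecked_two_users(0.01, 0.25)
print(f"one user:  {1000 * p1:.3f} unchecked errors per 1000 entries")  # 7.500
print(f"two users: {1000 * p2:.3f} unchecked errors per 1000 entries")  # 0.056
print(f"reduction: {p1 / p2:.0f}x")  # 133x
```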

Table 3: Double user probability of being error free

Because we considered both users statistically independent, the odds of both of them making a mistake at the same entry (the rate of collision) are quite low. The difference between the probability of being error free for a single user (Table 2) and for two users (Table 3) is striking, to say the least. For an average error rate of 0.1% and 30% back-checking, we go from 0.09% to 99.51%. In that specific case, the probability of an error-free project increases more than a thousand-fold for (theoretically) only double the man-hours.

This model does not account for whether the errors themselves collide. There is only one way of being right, but there are infinitely many ways of being wrong: if the two users make distinct mistakes at the same entry, the software still detects the mismatch. This bonus layer of error detection is not included in our current model.
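The collision effect can be illustrated with a small Monte Carlo simulation. This is a hypothetical sketch, not part of the original model: each unchecked error is assumed to take one of `wrong_values` equally likely wrong values, and the rates are deliberately exaggerated (20% error rate, 50% back-checking) so that double errors show up in a short run. The comparison only misses entries where both users made the identical mistake.

```python
import random


def simulate_two_users(p_error: float, check_rate: float, wrong_values: int,
                       n_entries: int, seed: int = 0) -> tuple[int, int]:
    """Return (entries both users got wrong, entries the comparison fails to flag)."""
    rng = random.Random(seed)  # fixed seed for a reproducible run

    def final_value() -> int:
        # 0 is the correct value; 1..wrong_values are distinct wrong values.
        if rng.random() < p_error and rng.random() >= check_rate:
            return rng.randint(1, wrong_values)  # error made and never rechecked
        return 0

    both_wrong = missed = 0
    for _ in range(n_entries):
        a, b = final_value(), final_value()
        if a and b:
            both_wrong += 1
            if a == b:  # identical mistakes: the comparison sees no difference
                missed += 1
    return both_wrong, missed


both, missed = simulate_two_users(0.2, 0.5, wrong_values=10, n_entries=100_000, seed=1)
print(f"both users wrong: {both}, missed by the comparison: {missed}")
```

With `wrong_values=10`, roughly one double error in ten goes unnoticed; with `wrong_values=1`, every double error does, which is the conservative assumption behind Table 3.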

Granted, there are many challenges to resolve before implementing such systems in practice, but the point remains: partial redundancy can be implemented simply by adding users (who, in theory, could work in parallel), and it yields a high return in safety. The probability of unchecked errors with "i" data-entry users is given in Figure 4, with some quick notes on how each variable affects the end probability. P(E) is the average user error rate, P(NV) is the average user back-checking rate, i is the number of independent users and N is the entry count.

Figure 4: Probability of unchecked mistakes with "i" independent data entering users.
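Figure 4 is not reproduced here, so the sketch below is our reading of it, chosen to be consistent with the numbers quoted for Tables 2 and 3: each user leaves an entry wrong and unchecked with probability P(E) × (1 − back-checking rate), an error survives only if all i independent users do so on the same entry, and the project is error free only if none of the N entries fails. The function name is hypothetical, and the sketch takes the back-checking rate directly and uses its complement.

```python
def probability_unchecked_mistake(p_error: float, check_rate: float,
                                  users: int, n_entries: int) -> float:
    """P(at least one entry is wrong for all `users` and caught by none of them)."""
    per_user = p_error * (1.0 - check_rate)       # one user: wrong and not rechecked
    per_entry = per_user ** users                 # all i independent users wrong on that entry
    return 1.0 - (1.0 - per_entry) ** n_entries   # complement of binomial P(k = 0)


# The case discussed above: P(E) = 0.1%, 30% back-checking, N = 10 000 entries.
for i in (1, 2, 3):
    free = 1.0 - probability_unchecked_mistake(0.001, 0.30, i, 10_000)
    print(f"{i} user(s): {free:.2%} chance of an error-free project")
# prints 0.09%, 99.51% and 100.00% (rounded)
```

Going from one to two users moves this case from about 0.09% to about 99.51% error free, matching the comparison above; a third user pushes it past 99.999%.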

Thinking of redundancy as both positive (a safety mechanism) and negative (time-consuming) will help us move forward without "throwing out the baby with the bath water". Replacing simple tasks like preliminary calculations (redundant) with software that enables quick 3D modelling (efficient) might seem like a no-brainer, but it may prove riskier in the long run. This does not mean that we cannot move towards more efficient methods. Rather, we must strive to identify what is lost in the efficiency trade-off and replace it.

5. Conclusion

In short, structural engineers are in many ways being pushed towards riskier practices. Before turning towards a more efficient practice, they must have a thorough understanding of how their old practice actually worked (see Chesterton's fence).

The world is highly competitive and efficient software is being developed at a rapid pace. We must find ways of maintaining redundancy, not only in our designs, but also in our everyday workflow. The alternative is to move towards efficiency (fragility) without question, and possibly be forced to react to our failures rather than lead with our foresight[5]. We leave you with a quote from N. Taleb[6]:

Redundancy is ambiguous because it seems like a waste if nothing unusual happens. Except that something unusual happens—usually.

6. Sources

- [1] Hollnagel, E., "From Safety-I to Safety-II: A White Paper", 2015

- [2] Smith, D. J., "Reliability, Maintainability and Risk: Practical Methods for Engineers", 8th Edition, 2011

- [3] Taleb, N. N., "The Black Swan: The Impact of the Highly Improbable", 2007

- [4] Shivers, C. H., "The Parable of the Boiled System Safety Professional: Drift to Failure", 2011

- [5] Petroski, H., "Success Through Failure: The Paradox of Design", 2008

- [6] Taleb, N. N., "Antifragile: Things That Gain From Disorder", 2012